Tesla first started making promises about full self driving vehicles in 2013. Since then, AI models have been trained on millions of miles of driving. Surely that means they've seen every possible situation, and that full self-driving is just around the corner. So where's my self-driving car?

Let’s look at a list of some of the conditions I’ve encountered while driving or being a passenger in a vehicle. (It's a long list. When you think you’ve got the idea, you may want to skip to the next section!):

- Dazzling sun preventing vision

- Dazzling headlights preventing forward vision or rear vision.

- Thick fog - foggy enough that neither side of the road is visible.

- Drifting smoke from burning stubble.

- Policeman directing traffic with unique arm gestures and facial expressions.

- Roadworker directing traffic wearing safety vest.

- Ordinary person directing road traffic around accident.

- Roadworker directing traffic with ambiguous stop / slow sign.

- Roadworker directing traffic with stop / slow sign reversed. i.e. showing stop when it should show slow and vice versa.

- “Local traffic only” sign - do I count as local traffic?

- Road closed - police vehicles used to block road. I live there, is it really closed?

- Unsafe wide load (oncoming traffic) requiring driving onto hard shoulder and waiting for load to pass.

- Oncoming vehicle on single track road (that means one lane shared between both directions) requiring one of us to reverse into a passing space.

- Road too narrow for vehicle to fit through. (Scraped both sides on buildings!)

- Road with vehicles parked on both sides requiring pulling in to space between parked vehicles to allow vehicle travelling in the opposite direction to pass.

- Flock of sheep being moved on road by shepherd.

- Marching soldiers on road.

- Injured motor cyclist on road.

- Motorcyclist takes turn too fast and slides self and motorcycle under front of car.

- Fire hoses laid across road, firemen directing traffic.

- Traffic light failure (no lights).

- Traffic light failure (all lights permanently red).

- Snow white-out - no forward or reverse vision.

- Snow - no visible road markings due to drifting snow.

- Snow - partial drift on road.

- Car crash - avoiding debris on road.

- Roadkill - avoiding large dead animal on road.

- Roadkill - driving over small dead animal on road.

- Large wild animals on road (take evasive action or stop).

- Small wild animals on road (only take evasive action if safe to do so).

- Fallen tree blocking part of road.

- Fallen tree blocking all of road.

- Debris on road that can be safely driven over.

- Debris on road that can't be safely driven over.

- Car mechanical problem - tire failure.

- Unsafe load on vehicle ahead - drop back to give more time to react.

- Debris blowing off vehicle (dump truck) ahead and hitting vehicle.

- Ice/snow blowing off vehicle ahead.

- Drunk driver swerving dangerously from side to side ahead.

- Driver behind me attempting unsafe overtaking manoeuvre and requiring speed adjustment to allow them to cut in front of me.

- Emergency service vehicle behind.

- Unmarked police car with emergency lights behind.

- Police officer in car signalling for me to stop using hand signals.

- Non-police car signalling for me to stop with flashing headlights to indicate problem (lights failure, load unsafe, etc.)

- Non-police car signalling for me to speed with flashing headlights because they want me to drive faster than the speed limit.

- Driving on sidewalk (pavement) to avoid obstruction.

- Driving onto private property (gas station forecourt, carpark, etc.) to avoid obstruction.

- Anticipating when small child is about to run into the road.

- Anticipating when mother with push chair is about to cross the road without checking for traffic first.

- Anticipating when other driver is about to run a red light or stop sign from lack of braking.

- Anticipating when cyclist is about to ride across pedestrian crossing.

- Unsteady cyclist requiring extra wide berth to overtake safely.

- Black ice (ice which looks like a wet road surface).

- Car ahead skidding on black ice.

- Car ahead on hill sliding backwards towards us on icy surface.

- Car sliding backwards on hill on icy surface.

- Road sign significantly defaced.

- Road sign altered (legitimately).

- Improvised road signage. (e.g. “Road Closed Flooding”, “Private Road”)

- Road sign contains several lines of text indicating when it applies (school days while lights flashing, weekdays 9am to 11am, etc)

- Potholes of sufficient depth to cause tire damage and require suspension realignment

- Ruts of sufficient depth to cause the car to “bottom out” on the roadway

- Garbage bag blowing across road.

- Emergency road closures with unmarked or partially marked diversions.

- Driver turning unexpectedly across path of vehicle resulting in minor collision (park immediately where safe to do so and exchange insurance details).

- Road signs in unknown language.

- Ambiguous or confusing parking restriction signs (sigh!) which determine when and how long you can park. (Does the car know that I need to park for less than fifteen minutes or more than three hours? I know.)

- Local laws which require drivers to give way to transit buses signalling to leave a bus stop.

- Local laws defining speed limits on otherwise unmarked roads (e.g. 30 m.p.h. limit on roads with street lights, 50 km/h limits inside town limits.

- Local laws defining speed limits based on the current weather. (e.g. national speed limit is reduced by 10 km/h if it is raining).

- Broken-down car stopped in lane - no lights

- Other driver signalling that he is relinquishing priority at junction with hand gestures.

- Other driver signalling that he is relinquishing priority at junction with flashing headlights.

- Driveway entrance blocked with string, chain, or other improvised objects due to driveway resurfacing.

- Road closed using police caution tape tied across road.

- Police directing traffic (taking precedence over working traffic lights).

- Police directing traffic around accident.

- Traffic sign (Stop sign) missing or destroyed by snow plow.

- Traffic sign (Stop sign) covered with graffiti

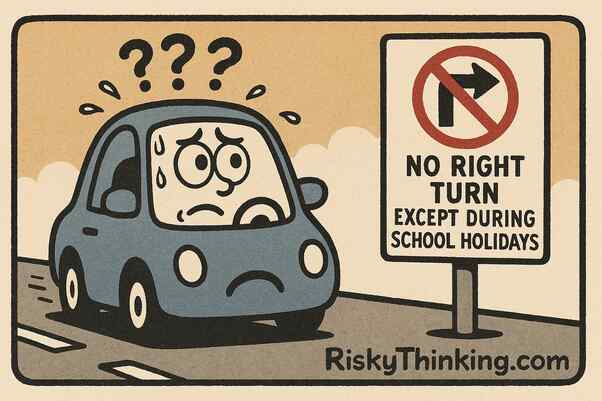

- Traffic light no right turn sign applying only between specific times on specific days.

- Generic warning signs with hand written additions (Road Flooded)

- Depth markers on side of road indicating depth of current flooding

- Road signs partially underwater

- Diversion signs which apply to specific diversions only.

- Axle limit weight restrictions

- Exceptions for “local traffic”

- Incorrect lane markings due to roadworks

- Patches which look like wet road but are actually black ice.

- Deer running into side of vehicle.

- Raccoons on the roadway.

- Skunks on the roadway.

- Large turtles crossing the road.

- Vultures feasting on the road.

- Bridges over single track roads where oncoming traffic is not visible.

Too Long; Didn’t read? Skip to this bit

I’m not a commercial driver, but I’ve encountered all except one of these at least once since I started driving. Each problem is unique. Each problem is less about the mechanics of driving and more about understanding the wider environment and taking the appropriate action. Some of these are hard problems even for a human (ever had to negotiate which way you should be turning with a police officer directing traffic? Ever had to negotiate your way past a police road block? Is it better to hit a deer or drive off the road into a ditch?)

Self driving trains work in because they operate in a highly constrained environment. Problems requiring human judgement have been mostly engineered out of the system with fences, barriers, etc. Trains travel fast, can’t stop quickly, and many lives have been lost in previous accidents. Multiple redundant safety systems are incorporated to reduce the risk of collision with another train.

A real full self-driving car needs to work in an unconstrained environment where the workings of the outside world need to be understood and taken into account. That requires a degree of general intelligence which no autonomous vehicle is likely to have in the foreseeable future. Expect driver assist, not driver replacement. Self-driving taxis will therefore continue to require the occasional remote human intervention whatever the grandiose promises being made to investors

The AI model for self-driving cars may be able to train on common conditions — but it’s the unforeseen uncommon conditions that will kill you.

Stay up to date with the free Risky Thinking Newsletter.

]]>

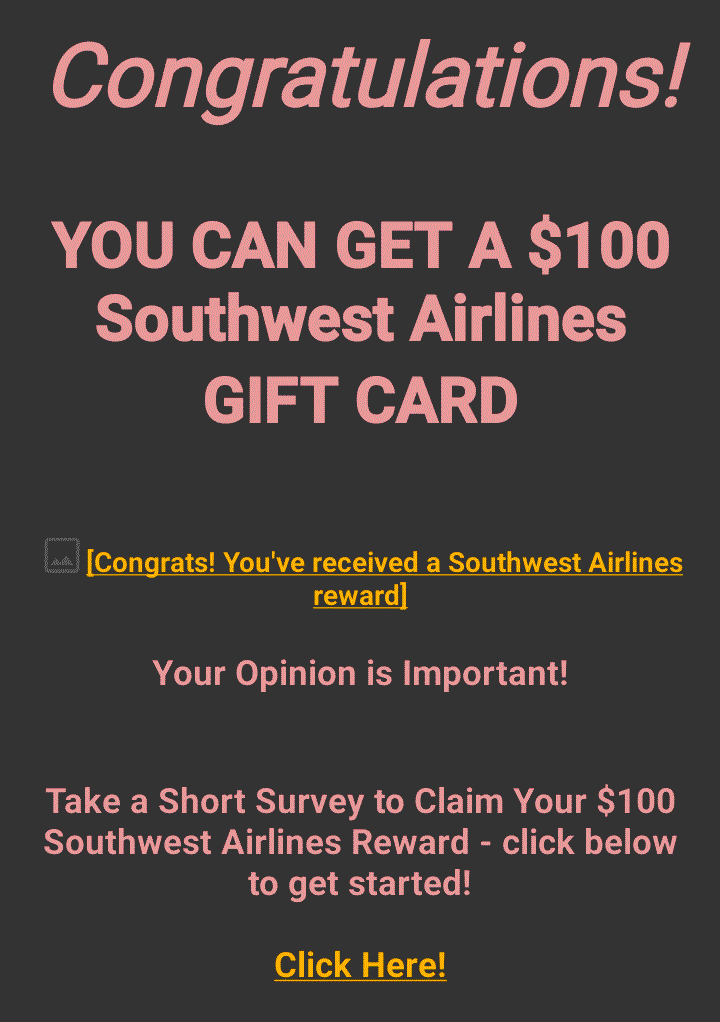

I received an email survey today. Not surprising. Many companies send them out. This one was from Southwest Airlines, and it offered me a reward of $100 for completing their survey.

I received an email survey today. Not surprising. Many companies send them out. This one was from Southwest Airlines, and it offered me a reward of $100 for completing their survey.